Approach with caution: Crucial questions for the charity sector amid the ChatGPT hype

We need to talk about ChatGPT. I’m seeing a lot of posts on charity support sites or LinkedIn with titles like “5 ways to make ChatGPT part of your digital strategy” or even shaming charities for not “getting on board” yet.

It’s easy to be dazzled by the promise of opportunities and hard to resist the urge to keep up. But in our sector especially, we should be looking into the ethics and details of any new tech first.

So before you do anything to your strategy, let’s be curious about what this new technology is, how it got made, and who is behind it.

Here are four things to consider when assessing ChatGPT

(Plus plenty of sources for much more info. #CitationsAreSolidarity)

1. AI is people

TIME’s exclusive reporting from Julia Zorthian & Billy Perrigo shared how OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic.

To keep ChatGPT “clean”, workers were paid poverty wages and repeatedly subjected to hateful and harmful content. As the article puts it, “much of that text appeared to have been pulled from the darkest recesses of the internet. Some of it described situations in graphic detail like child sexual abuse, bestiality, murder, suicide, torture, self harm, and incest.” There is already a lawsuit over the harm caused by consuming so much of this content.

It’s no good having equity and inclusion policies or speaking out on workers’ rights, and then swiftly embracing tools built on the exploitation of Black, Brown, and other racialised workers, or tools that profit from people living below the poverty line.

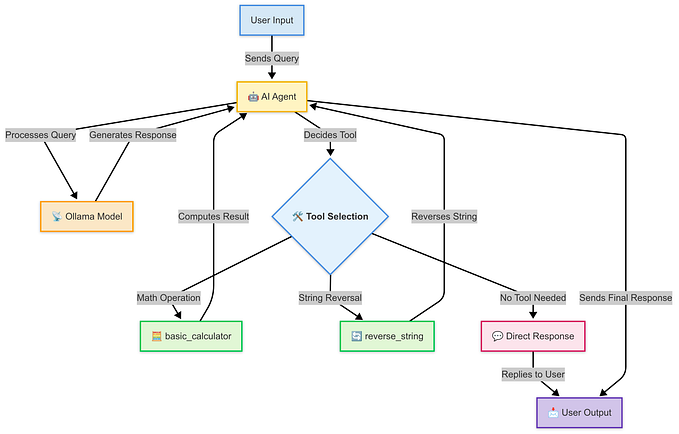

Fundamentally, using the “AI” tag to market these tools hides a lot of work. These tools are not an artificial intelligence that builds itself. They are programs built by humans, trained on the writing and work of other humans, and reviewed and edited by still more people.

An excellent piece by Jathan Sadowski, Potemkin AI, digs further into the smokescreen of “services that purport to be powered by sophisticated software, but actually rely on humans acting like robots”.

Minimising the role humans play can also be a marketing strategy to attract investment and early adopters. Astra Taylor’s The Automation Charade examines both the gleam of the advertising and the capitalist narratives that use the threat of AI to drive down wages and weaken labour movements.

The article explains how “capitalism lives and grows by concealing certain kinds of work, refusing to pay for it, and pretending it’s not, in fact, work at all.” It is so important that we recognise the hidden and undervalued labour in the products we use.

Very clever work has gone into ChatGPT and similar tools, but always remember that people make them possible, and those people matter.

2. Let’s get our facts straight

To use the tool confidently in a professional setting, we should be able to rely on it for accurate answers. However, what ChatGPT spits back out is frequently biased, inaccurate or simply wrong.

For example, people on Twitter have recently been exposing the sexism ChatGPT generates in simple grammar questions about who the “she” in a given sentence might be. One exchange, in which ChatGPT ties itself in knots to conclude that “doctors cannot get pregnant”, got some real laughs in my group chat (New birth control: Get a doctorate!), but it exposes the kind of bias the tool will be replicating.

If it can’t work out who “she” refers to in a single sentence, how can it be used to write content or answer questions on the complex social issues charities work on? It’s no wonder it doesn’t put forward women as “experts” when asked for recommendations, another issue that keeps coming up.

Sexism is merely one blind spot among many in its programming. I’ve seen a wide variety of misinformation confidently served up, from crediting the wrong people for work to glaring Western bias in answers on history, religion and politics. To read more on this point, I recommend Maggie Harrison’s ChatGPT Is Just an Automated Mansplaining Machine, a fantastic piece that hits the nail on the head.

ChatGPT is also just wrong about actual facts all the time, making up numbers and stories to suit the prompt. Examples are easy to find, from a generated article that included made-up quotes to a lawyer in serious trouble for citing non-existent cases in a court filing, all supplied by ChatGPT.

What it often produces is something that sounds right and has the look of sense, but any expert can see through the facade and expose its cracks.

3. Consent is crucial

The company behind ChatGPT, OpenAI, also created DALL-E: a tool for generating images, trained on millions of copyrighted images fed into its system without consent.

In an open letter, the Center for Artistic Inquiry and Reporting described tools like it as “effectively the greatest art heist in history” and asked artists, publishers, journalists, editors, and journalism union leaders to pledge against replacing human art with generative tools.

ChatGPT doesn’t stray far from this pattern of building on other people’s labour, and OpenAI stays vague about the data used to train it. However, investigative journalism from the Washington Post, analysing a widely used training dataset of the kind behind tools like ChatGPT, showed how sites like Patreon and Kickstarter were included, as well as many news agencies and personal blogs.

While the list raises serious red flags about its content sources (including Breitbart, the notoriously racist right-wing news outlet), there is also no evidence of consent or copyright permission for the inclusion of all this writing.

The screenwriters’ labour action currently under way in Hollywood is taking up the issue, fighting both the use of their creative material to train machines and the use of tools like ChatGPT as a replacement in the writers’ room.

Likewise, voice actors are taking a stand and mobilising against contracts that ask them to sign away the rights to their own voices so AI imitations can be made. These clauses allow studios to have people, dead or alive, say words they never said and perhaps never would.

There are also already multiple lawsuits under way against the makers of these programs for using people’s content and creativity without consent. As Jess Weatherbed writes in The Verge, OpenAI’s regulatory troubles are only just beginning.

Whilst these workers’ rights battles play out, using content that is the product of plagiarism raises questions of both legal risk and ethical integrity for the charity sector.

I’ve written elsewhere that consent is everything. It’s a principle we can’t devalue in one space and then defend as crucial in another. Whether you work on healthcare, workers’ rights, human rights, gender issues or Queer rights, all of these causes and many more rest on a foundation of consent and bodily autonomy that we should work together to uphold.

4. Follow the money

Finally, it is worth being aware that OpenAI’s co-founders include Elon Musk and Sam Altman, two vastly wealthy Silicon Valley “effective altruists”. Effective altruism is a philosophy of philanthropy that begins with a model of economic efficiency: what is the most cost-effective donation? What are the most lives saved for your buck?

Over time, this philosophy has become increasingly focused on the lives of future generations, channelling wealth into projects on “end of the world” scenarios (such as an AI takeover) rather than responding to present injustices.

Those who fear the robot overlord apocalypse are often heavily involved in these AI technologies because they see it as better to be in the driving seat than to let others “get it wrong”. Vox has an interesting piece on the growth and shifts within effective altruism if you want to read more.

There’s clearly a whole world of debate over this philosophy, and I won’t go further into it here. I would simply say that, as with taking large corporate donations, it’s smart to dig into the money, ask where it comes from, and check whether it aligns with your mission.

Questions to ask before using ChatGPT

With all this in mind, any organisation looking to build ChatGPT and similar tools into its strategy should do so with real consideration of the issues involved, and should ask these questions:

- Is this compatible with our values and principles?

- Is the tool truly accurate and useful?

- Does this carry legal risk?

- Does this have reputational risk?

When the tech and its business model are not honestly compatible with your vision for a better world, it’s important to be able to choose confidently against the tide of pressure.

This doesn’t mean there’s no space for tech tools and innovation in the charity sector (voice-to-text converters are amazing, and I regularly run readability checks on my work), but we need to be open to understanding the full picture and curious enough to ask tough questions amid the hype.

Hopefully this information, and the excellent journalism available to dive in deeper, will help you build a thoughtful approach to ChatGPT and similar tools for your charity going forwards.

For more curious conversations on topics like this, check out the podcast I co-host on technology and feminism, The Intersection of Things.